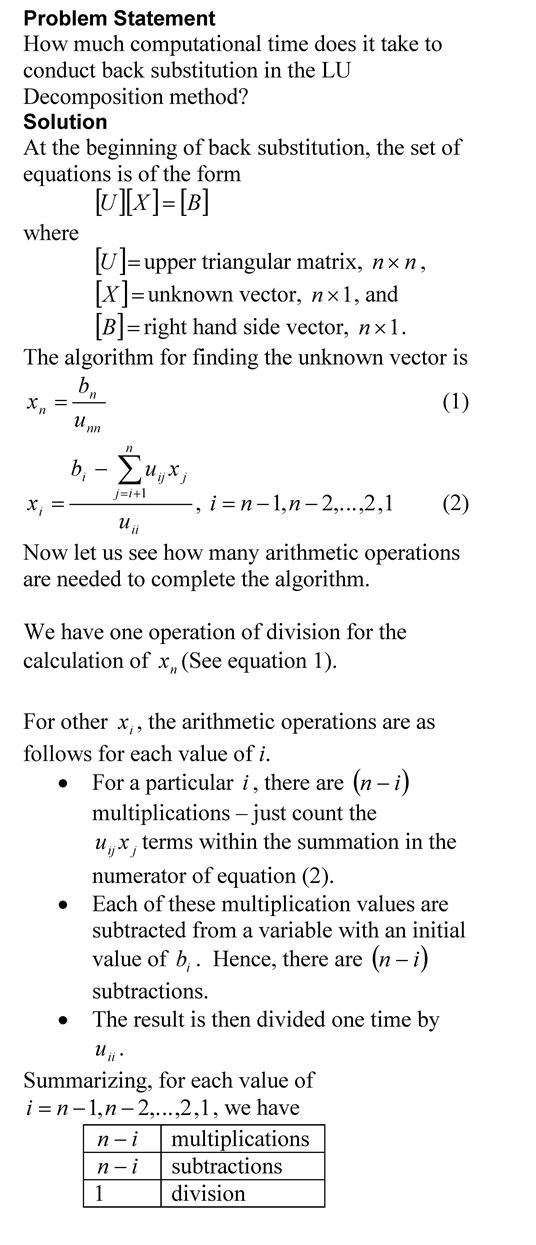

Problem Statement

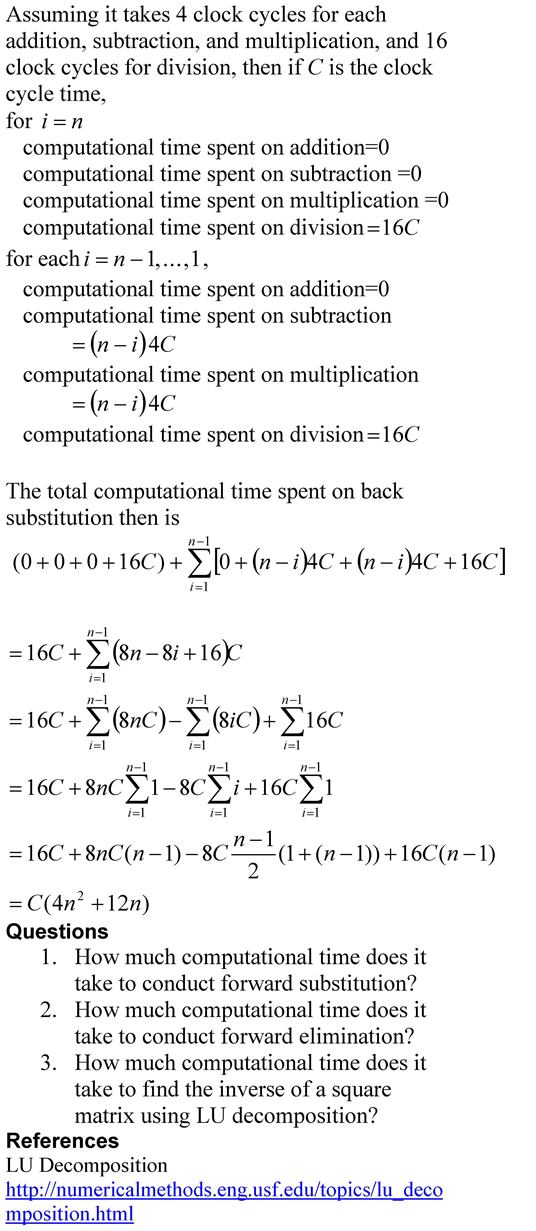

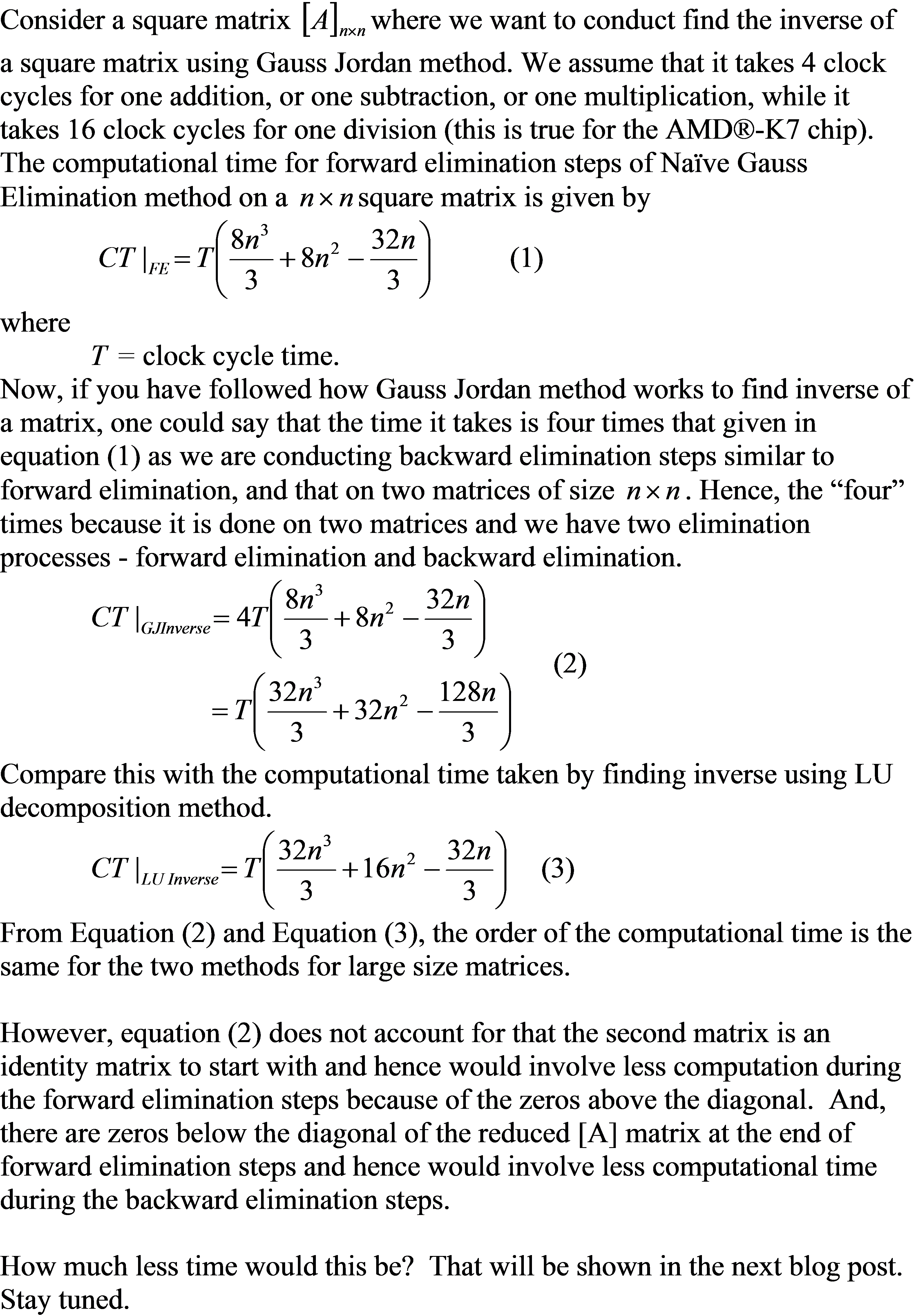

How much computational time does it take to find the inverse of a square matrix using the Gauss-Jordan method? Part 1 of 2.

Solution

To understand the solution, you should be familiar with the Gauss-Jordan method of finding the inverse of a square matrix. Peter Young of UCSC describes it briefly in this pdf file; if you prefer watching an example on video, you can see PatrickJMT work through one. You also need to read a previous blog post where we calculated the computational time needed for the forward elimination steps on a square matrix in the Naïve Gauss elimination method. We are now ready to estimate the computational time required for the Gauss-Jordan method of finding the inverse of a square matrix.
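To make the idea of counting operations concrete, here is a minimal Python sketch of Gauss-Jordan inversion that tallies the divisions, multiplications, and subtractions it performs. It is an illustration only, not the derivation used in this post: the function name `gauss_jordan_inverse` and the `counts` dictionary are assumptions made for this example. The sketch does no row swapping and operates on every column of the augmented matrix, including entries already known to be zero, so its tallies may be higher than a hand count that skips those entries.

```python
def gauss_jordan_inverse(a):
    """Invert a square matrix (list of lists) by Gauss-Jordan elimination.

    Returns (inverse, counts), where counts tallies the floating-point
    operations performed. Hypothetical helper for illustration only:
    no pivoting, and no skipping of entries known to be zero.
    """
    n = len(a)
    # Build the augmented matrix [A | I].
    aug = [
        [float(x) for x in row] + [1.0 if i == j else 0.0 for j in range(n)]
        for i, row in enumerate(a)
    ]
    counts = {"div": 0, "mul": 0, "sub": 0}

    for k in range(n):
        pivot = aug[k][k]
        if pivot == 0.0:
            raise ZeroDivisionError("zero pivot; this sketch does no row swaps")

        # Normalize the pivot row so the pivot becomes 1.
        for j in range(2 * n):
            aug[k][j] /= pivot
            counts["div"] += 1

        # Eliminate column k from every other row (above and below).
        for i in range(n):
            if i == k:
                continue
            factor = aug[i][k]
            for j in range(2 * n):
                aug[i][j] -= factor * aug[k][j]
                counts["mul"] += 1
                counts["sub"] += 1

    # The right half of the augmented matrix now holds the inverse.
    inverse = [row[n:] for row in aug]
    return inverse, counts


if __name__ == "__main__":
    # Tally operations for a few sizes using a diagonally dominant test matrix.
    for n in (2, 4, 8, 16):
        test = [[float(n) if i == j else 1.0 for j in range(n)] for i in range(n)]
        _, counts = gauss_jordan_inverse(test)
        print(n, counts)
```

Running the loop at the bottom for increasing `n` shows how quickly the tallies grow with the matrix size, which is the question the computational-time estimate answers in closed form.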

This post is brought to you by

- Holistic Numerical Methods Open Course Ware:

- Numerical Methods for the STEM undergraduate at http://nm.MathForCollege.com;

- Introduction to Matrix Algebra for the STEM undergraduate at http://ma.MathForCollege.com

- the textbooks on

- the Massive Open Online Courses (MOOCs) available at